By Daniel Roarty, CIO of sustainable thematic equities, and Ben Ruegsegger, portfolio manager of sustainable thematic equities at AllianceBernstein

There is a big buzz around artificial intelligence and its potential to change the world. But much less has been said about its energy footprint.

‘Generative’ AI—which uses machine learning to generate content—is wildly popular, as illustrated by OpenAI’s ChatGPT and countless other applications promising enhanced productivity and business benefits. But here’s the rub: AI requires massive computational power to train models. And that raises a thorny issue: the energy impact of AI.

Companies helping to solve this energy conundrum could enable a sustainable future for this burgeoning technology and create opportunities for equity investors.

Generative AI Is an Energy Hog

There are two primary stages behind the magic of machine learning. The first is training, in which a model learns patterns from large volumes of data. The second is inference, in which the trained model is used to generate content, analyse new data and produce actionable results.

All of this requires energy. And the more powerful and complex the model, the more energy required.

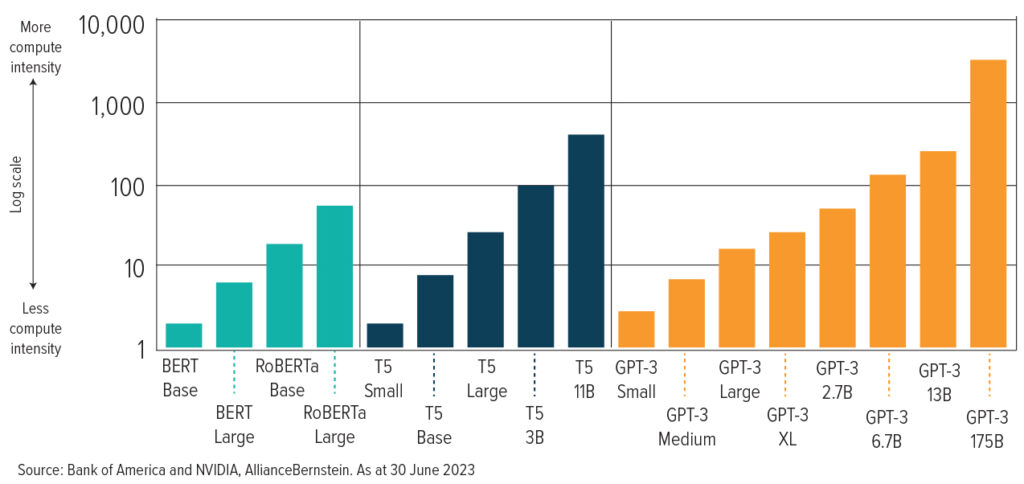

Training compute for AI models, in petaFLOP/s-days (one petaFLOP/s-day is the total work done by computing at one quadrillion floating-point operations per second for a full day)
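
Strictly, a petaFLOP/s-day measures total compute rather than elapsed time: how many days a model actually takes to train depends on how fast the hardware runs. The short Python sketch below makes the conversion explicit. The 1,000 petaFLOP/s-day budget and the 10 petaFLOP/s cluster speed are hypothetical values chosen only for illustration, not figures for any real model.

```python
# Convert between petaFLOP/s-days (a unit of total compute) and raw operations.
PETAFLOP_PER_SECOND = 1e15          # floating-point operations per second
SECONDS_PER_DAY = 24 * 60 * 60      # 86,400 seconds

# One petaFLOP/s-day = operations performed at 1 petaFLOP/s sustained for one day.
FLOPS_PER_PFS_DAY = PETAFLOP_PER_SECOND * SECONDS_PER_DAY  # 8.64e19


def pfs_days_to_flops(pfs_days: float) -> float:
    """Total floating-point operations represented by `pfs_days`."""
    return pfs_days * FLOPS_PER_PFS_DAY


def training_days(pfs_days: float, sustained_pflops: float) -> float:
    """Wall-clock days needed to perform `pfs_days` of compute on hardware
    sustaining `sustained_pflops` petaFLOP/s (a hypothetical cluster speed)."""
    return pfs_days / sustained_pflops


if __name__ == "__main__":
    budget = 1_000  # hypothetical training budget in petaFLOP/s-days
    print(f"{pfs_days_to_flops(budget):.3e} FLOPs")
    print(f"{training_days(budget, sustained_pflops=10):.0f} days at 10 petaFLOP/s")
```

The same compute budget would take half as long on hardware twice as fast, which is why the unit describes the size of the training job rather than its duration.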

OpenAI’s GPT-3 model is illustrative. According to Stanford University, the energy needed to train GPT-3 could power an average American home for more than 120 years. Meanwhile, Bay Area chipmaker NVIDIA notes that the computing power, and therefore the energy, needed to train models that include transformers (a form of deep-learning architecture) has increased 275-fold every two years.
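
The 275-times-every-two-years figure compounds quickly. The Python sketch below assumes, for simplicity, that the growth is smooth and exponential, so the implied yearly multiple is the square root of 275 (about 16.6 times per year) and four years of growth amounts to 275 squared, or 75,625 times.

```python
def growth_factor(rate_per_period: float, period_years: float, years: float) -> float:
    """Cumulative multiple after `years`, given growth of `rate_per_period`x
    every `period_years` years, assuming smooth exponential compounding."""
    return rate_per_period ** (years / period_years)


if __name__ == "__main__":
    # 275x every two years, as cited in the text.
    for years in (1, 2, 4, 6):
        print(f"{years} yr: {growth_factor(275, 2, years):,.1f}x")
```

Real-world growth arrives in steps as new models are released, so this smooth curve is an approximation rather than a forecast.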

The Many Sources of Energy Consumption

AI’s energy consumption will come from many corners, ranging from the maintenance of large models to AI-assisted products like search and chatbots.

This need for energy will ultimately accelerate the construction of power-hungry data centres, which already account for nearly 1% of global electricity use, according to the International Energy Agency. On the plus side, increasingly complex models will require more specialised graphics processing units (GPUs), which deliver higher performance-per-watt than traditional central processing units (CPUs). This shift could partly offset the overall power needed to train and run AI models.

Emissions are also an issue. In particular, investors are pushing companies to measure their Scope 3 emissions: the indirect emissions generated upstream and downstream in a company’s value chain, which can be difficult to quantify. As AI use increases, the Scope 3 emissions of companies that rely on AI, including firms with traditionally low carbon footprints, are likely to grow correspondingly.

How Are Companies Addressing the AI Energy Conundrum?

Companies central to and on the periphery of AI are beginning to address the enormous associated energy challenge. Investors should pay attention to three key areas:

Hardware and Software: Reducing AI-related energy use will require new processor architectures. US semiconductor makers such as AMD and NVIDIA are focused on delivering more energy-efficient performance. AMD has set a goal of increasing the energy efficiency of its processors and accelerators used in AI training and high-performance computing 30-fold over a five-year period. According to NVIDIA, in some applications its GPU-based servers use 25 times less energy than CPU-based alternatives. As GPUs take share from CPUs in data centres, overall energy efficiency should improve.
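
To put these efficiency targets in perspective, the Python sketch below translates them into more intuitive terms. It assumes the AMD gain accrues at a constant yearly rate, and that the workload stays fixed, so energy used equals work divided by efficiency. In practice, growing AI demand can absorb some of these savings.

```python
def implied_annual_gain(total_multiple: float, years: float) -> float:
    """Constant yearly efficiency multiple that compounds to `total_multiple`
    over `years` years (assumes steady, even progress)."""
    return total_multiple ** (1 / years)


def energy_saving(efficiency_multiple: float) -> float:
    """Fraction of energy saved on a fixed workload when performance-per-watt
    rises by `efficiency_multiple` (energy = work / efficiency)."""
    return 1 - 1 / efficiency_multiple


if __name__ == "__main__":
    # AMD's stated goal: a 30x efficiency gain over five years.
    print(f"AMD goal implies ~{implied_annual_gain(30, 5):.2f}x per year")
    # NVIDIA's claim: 25x less energy than CPU-based servers in some applications.
    print(f"25x less energy = {energy_saving(25):.0%} saving")
```

On these assumptions, AMD’s goal means roughly doubling efficiency every year, and a 25-fold gain means the same job uses about 4% of the energy, a 96% saving.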

Conserving energy will also require advanced chip-packaging techniques, along with power-management technologies such as dynamic voltage and frequency scaling (which lowers a chip’s voltage and clock speed when full performance is not needed) and thermal management. Semiconductor companies, including chipmaker TSMC and lithography-equipment supplier ASML, will likely have a significant role to play in bringing these innovations to market.
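
The dynamic voltage and frequency scaling mentioned above works because the switching power of CMOS chips follows the standard relationship P = a × C × V² × f (activity factor, switched capacitance, supply voltage, clock frequency). The Python sketch below uses hypothetical chip parameters, chosen only for illustration, and ignores static (leakage) power. It shows that cutting voltage and frequency together reduces power sharply, and that energy per clock cycle falls with the square of the voltage.

```python
def dynamic_power(activity: float, capacitance_f: float,
                  voltage_v: float, freq_hz: float) -> float:
    """Switching (dynamic) power of CMOS logic, P = a * C * V^2 * f, in watts.
    Static leakage power is ignored in this simplified model."""
    return activity * capacitance_f * voltage_v ** 2 * freq_hz


if __name__ == "__main__":
    # Hypothetical chip parameters, for illustration only.
    a, c = 0.2, 1e-9  # activity factor, switched capacitance (farads)
    high = dynamic_power(a, c, voltage_v=1.0, freq_hz=2.0e9)
    # DVFS: scale voltage and frequency down together when demand is low.
    low = dynamic_power(a, c, voltage_v=0.8, freq_hz=1.0e9)
    # Energy per clock cycle = power / frequency, which scales with V^2.
    print(f"power: {high:.3f} W -> {low:.3f} W ({low / high:.0%} of original)")
    print(f"energy per cycle: {high / 2.0e9:.2e} J -> {low / 1.0e9:.2e} J")
```

With these made-up numbers, power drops to about a third while each cycle costs about 64% of the original energy; the trade-off is that work completes more slowly at the lower clock speed.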

Investors will also be hearing more about power semiconductors, which help improve the power management of AI servers and data centres. Power semiconductors regulate current and can lower overall energy use by integrating more functionality in smaller footprints. Firms like Kirkland, Monolithic Power Systems and Infineon Technologies are at the forefront.

Improvements in Data Centre Design: As AI adoption fuels the expansion of data centre capacity, firms that supply data centre components could reap benefits. Key components include power supplies, optical networking, memory systems and cabling.

Coming full circle, companies are also using AI to optimise data centre operations. In 2022, for example, Google DeepMind trained a reinforcement-learning agent called BCOOLER to optimise Google’s data centre cooling procedures. The result: an energy saving of roughly 13%. Data centres are becoming more energy efficient even as their numbers grow.

Renewables: Although renewables made up just 21.5% of US electricity generation in 2022, AI demand could open the door to greater use over time. AI data centres will be operated by the likes of Microsoft and Alphabet, whose net zero policies are among the industry’s best. AI could therefore improve the investment prospects of renewables more broadly.

In all these areas, investors should search for quality companies with a technological advantage, persistent pricing power, healthy free-cash-flow generation and resilient business models. Companies with strong fundamentals that are poised to participate in and benefit from increased demand for energy-efficient AI capabilities could provide attractive opportunities for equity investors with a sustainable focus.

Initiatives aimed at creating a more energy-efficient AI ecosystem might not be in the spotlight now, but they could eventually unlock attractive return potential for investors who can spot the potential solutions early.